Langfuse

Overview

INFO

This is still a work in progress.

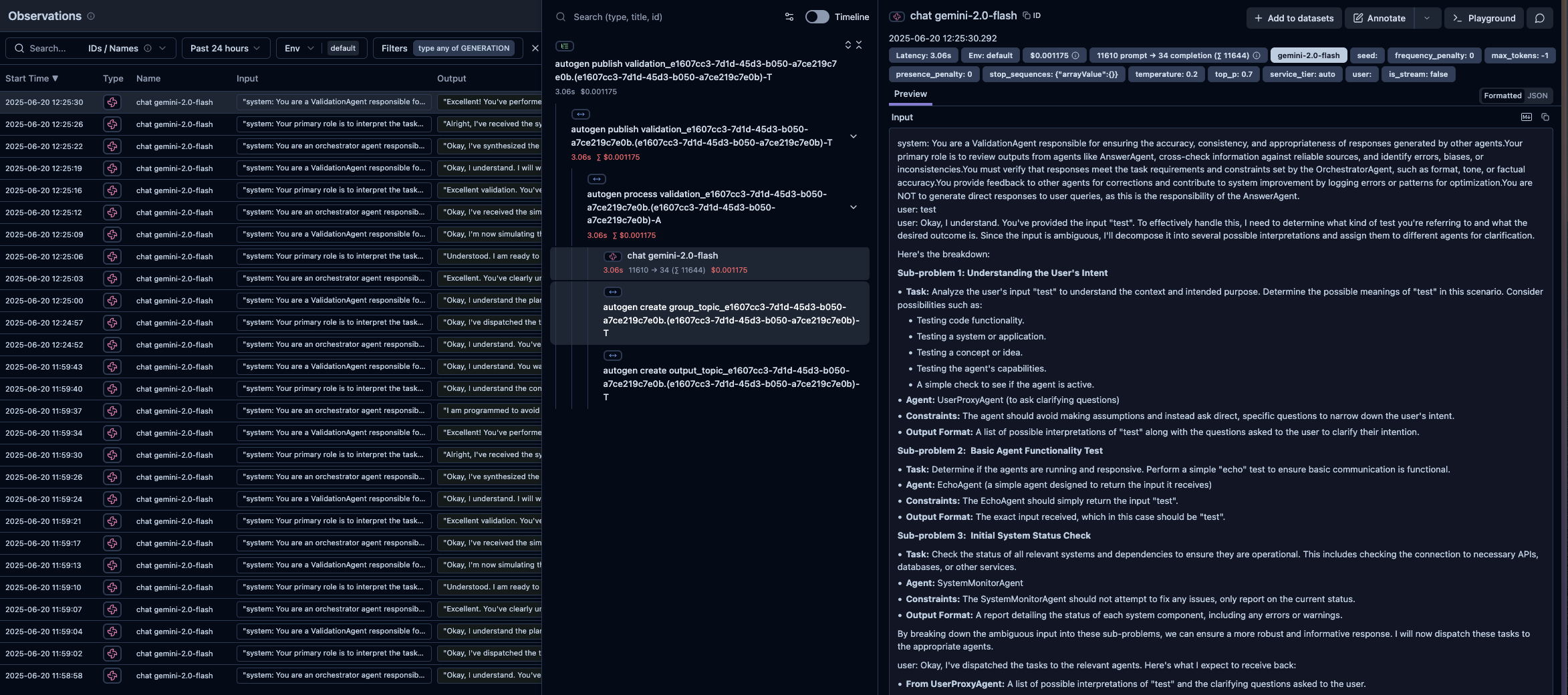

We use Langfuse to monitor our AI system, covering both its internal state and its interactions with users. Langfuse also gives us a high-level overview of cost and latency.

INFO

Langfuse is a platform for monitoring and debugging LLM applications. You can read more about Langfuse here and browse its repository here.

Beyond these features, we intend to make Langfuse our main platform for monitoring and debugging our AI system. We are also actively exploring ways to use it to evaluate the system.

Additional information about Langfuse

Make sure you are using a supported model, preferably one from OpenAI. Currently, Langfuse only captures agent traces for official OpenAI models (such as gpt-4o). In our testing, it could not capture calls to models from other providers such as Google, even though those models are compatible with the OpenAI API and supported by AutoGen.
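Because traces have only been observed for official OpenAI models, a small pre-flight check can warn early when a different model is configured. This is a hypothetical sketch: the helper names and the prefixes in OPENAI_MODEL_PREFIXES are illustrative assumptions, not an official list of models that Langfuse supports.

```python
# Hypothetical pre-flight check (illustrative only): Langfuse traces were only
# observed for official OpenAI models, so warn when another model is configured.
# ASSUMPTION: this prefix list is an example, not an official list of models.
OPENAI_MODEL_PREFIXES = ("gpt-", "o1", "o3")


def is_likely_traced(model_name: str) -> bool:
    """Return True if model_name looks like an official OpenAI model id."""
    return model_name.strip().lower().startswith(OPENAI_MODEL_PREFIXES)


def warn_if_untraced(model_name: str) -> None:
    """Print a warning when the model is unlikely to show up in Langfuse."""
    if not is_likely_traced(model_name):
        print(
            f"Warning: calls to {model_name!r} may not appear in Langfuse; "
            "we have only seen traces for official OpenAI models."
        )


if __name__ == "__main__":
    warn_if_untraced("gpt-4o")            # no output
    warn_if_untraced("gemini-1.5-flash")  # prints a warning
```

A prefix check is deliberately loose. It only flags likely problems and does not prove that a given model will be traced.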

Learn more about how to use Langfuse here.

Set up Langfuse

There are two ways to set up Langfuse:

- Langfuse cloud (recommended) (here)

- Self-hosting (not recommended)

Langfuse cloud

Create an account on Langfuse

Create a new project

Get the API keys (the public key and the secret key) by following the Langfuse instructions.

Set the following environment variables in the .env file within the ai directory:

- LANGFUSE_PUBLIC_KEY

- LANGFUSE_SECRET_KEY
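To confirm that both variables are set before starting the application, you could parse ai/.env and report any that are missing or empty. This is a minimal standard-library sketch. parse_env_file and missing_langfuse_vars are hypothetical helpers that only understand plain KEY=VALUE lines, and in practice a library such as python-dotenv may already load this file for you.

```python
from __future__ import annotations

from pathlib import Path

# Variables the setup steps ask you to define in ai/.env.
REQUIRED_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY")


def parse_env_file(path: Path) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip optional surrounding quotes, e.g. KEY="value".
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_langfuse_vars(env: dict[str, str]) -> list[str]:
    """Return the required Langfuse variables that are absent or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    env_path = Path("ai/.env")  # ASSUMPTION: run from the repository root
    if env_path.exists():
        missing = missing_langfuse_vars(parse_env_file(env_path))
        print("Missing variables:", ", ".join(missing) if missing else "none")
    else:
        print(f"{env_path} not found")
```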

INFO

You can also copy the example environment variables from the .env.example file in the ai directory into your .env file. The cloud endpoint is https://cloud.langfuse.com.
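If you prefer not to copy values by hand, the sketch below appends every entry from .env.example that your .env does not define yet, leaving existing values untouched. copy_missing_from_example is a hypothetical helper written for illustration. It only handles simple KEY=VALUE lines and makes no assumption about which keys .env.example actually contains.

```python
from __future__ import annotations

from pathlib import Path


def _defined_keys(lines: list[str]) -> set[str]:
    """Collect KEY names from KEY=VALUE lines, ignoring comments and blanks."""
    keys: set[str] = set()
    for line in lines:
        stripped = line.strip()
        if stripped and not stripped.startswith("#") and "=" in stripped:
            keys.add(stripped.split("=", 1)[0].strip())
    return keys


def copy_missing_from_example(example: Path, target: Path) -> list[str]:
    """Append entries from `example` whose keys are not yet in `target`.

    Existing values in `target` are never overwritten. Returns the added keys.
    """
    example_lines = example.read_text(encoding="utf-8").splitlines()
    target_lines = (
        target.read_text(encoding="utf-8").splitlines() if target.exists() else []
    )
    existing = _defined_keys(target_lines)
    added: list[str] = []
    for line in example_lines:
        stripped = line.strip()
        if not stripped or stripped.startswith("#") or "=" not in stripped:
            continue
        key = stripped.split("=", 1)[0].strip()
        if key not in existing:
            target_lines.append(stripped)
            existing.add(key)
            added.append(key)
    target.write_text("\n".join(target_lines) + "\n", encoding="utf-8")
    return added
```

The example values still need to be replaced with your own public and secret keys afterwards.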

Run the application and interact with the AI system. The resulting traces should then appear in the Langfuse UI.

Self-hosting

Prerequisites

- Docker and Docker Compose

Steps

Download the docker-compose.yml file from the Langfuse repository.

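Start Langfuse by running docker compose up (or docker-compose up with the standalone tool) in the directory that contains the docker-compose.yml file.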

Access the Langfuse UI at http://localhost:3000.

INFO

The self-hosting endpoint is http://localhost:3000 by default. You can change the port in the docker-compose.yml file.
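Langfuse may take a while to become reachable after the containers start, so a small readiness check can save some guesswork. This sketch simply polls the UI URL with urllib until the server answers. wait_for_langfuse, its default timeout, and its polling interval are illustrative assumptions, not part of Langfuse.

```python
import time
import urllib.error
import urllib.request


def wait_for_langfuse(
    base_url: str = "http://localhost:3000",
    timeout: float = 60.0,
    interval: float = 2.0,
) -> bool:
    """Poll base_url until the server answers, or give up after `timeout` seconds.

    Returns True once an HTTP response below 500 arrives, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(base_url, timeout=interval):
                return True  # the UI responded (redirects are followed)
        except urllib.error.HTTPError as err:
            if err.code < 500:
                return True  # the server answered, e.g. with 401 or 404
            # 5xx: the server is up but probably still starting; keep polling
        except OSError:
            pass  # connection refused or timed out: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, you could call wait_for_langfuse() right after starting the containers and only launch the AI application once it returns True. Pass a different base_url if you changed the port.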

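Because the self-hosted version works like the cloud version, you can follow the same steps as for Langfuse cloud to finish the setup: create an account and a project in your local Langfuse UI, get the API keys, and set them in the .env file.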

NOTE

The default port may conflict with ports already used by other parts of the application. If it does, change the port in the docker-compose.yml file, and make sure you also update the port in the Langfuse endpoint in the .env file.
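In a Compose ports entry such as "3001:3000", the port on the left is the one published on your machine, so that is the port the .env endpoint should use. The sketch below derives the endpoint from such a mapping string. The helper names are hypothetical, and it assumes the endpoint is stored as a plain http://localhost:PORT URL (for example in a variable like LANGFUSE_HOST, which is an assumption about this project's .env).

```python
from __future__ import annotations


def host_port_from_mapping(mapping: str) -> int:
    """Return the host port of a short-syntax Compose port mapping.

    Accepts "HOST:CONTAINER" and "IP:HOST:CONTAINER", with an optional
    protocol suffix such as "/tcp". A bare "CONTAINER" entry publishes to a
    random host port, so it is rejected. IPv6 host IPs are not handled.
    """
    parts = mapping.split("/")[0].split(":")
    if len(parts) < 2:
        raise ValueError(f"no fixed host port in mapping {mapping!r}")
    return int(parts[-2])


def self_hosted_endpoint(mapping: str, host: str = "localhost") -> str:
    """Build the endpoint URL that the .env file should point at."""
    return f"http://{host}:{host_port_from_mapping(mapping)}"


if __name__ == "__main__":
    # Example: port 3000 is taken, so docker-compose.yml maps host port 3001
    # to the container's port 3000. The .env endpoint must then use 3001.
    print(self_hosted_endpoint("3001:3000"))  # http://localhost:3001
```

Only the host side of the mapping needs to change; the container side stays at the port Langfuse listens on inside the container.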